From Data Protection to AI Security Posture Management: What I’ve Learned as an Early Advisor to TrustLogix

Why CIOs and CISOs Must Treat AI Security Posture Management as the Next Strategic Imperative for Data Governance and Enterprise Resilience

When I first joined TrustLogix as one of its early board advisors, the challenge we were tackling felt deceptively narrow: securing data in use for CI/CD pipelines. It was about ensuring that sensitive customer and enterprise data wasn’t misused or left vulnerable during the development process. At the time, this was a bold move—shifting the industry conversation from static data-at-rest protections toward the live, moving, constantly changing streams of data that powered modern engineering.

Fast forward a few years, and the landscape looks very different. What began as a fight to control developer pipelines has evolved into a much larger, more urgent problem: safeguarding data privacy, security, and governance in the age of generative AI. Today, that evolution is crystallizing into a new strategic discipline: AI Security Posture Management (AI-SPM).

The Inflection Point for CxOs

Adopting generative AI and large language models (LLMs) is no longer optional. McKinsey estimates that generative AI could deliver up to $4.4 trillion annually in economic value across industries. A Salesforce study shows that 61% of employees are eager to leverage generative AI, yet most lack the knowledge or skills to use it securely.

That tension—between enthusiasm and operational readiness—is where risk breeds. On one hand, boards and executive teams see AI as a lever for efficiency and competitive advantage. On the other, CISOs and CIOs are already grappling with a new class of threats, from data leakage to adversarial attacks, without the benefit of well-worn playbooks.

The next 18 months will determine whether enterprises harness AI’s potential responsibly or stumble into costly missteps. And the stakes are high: regulators are moving quickly, consumers are hyper-aware of privacy risks, and adversaries—both criminal and nation-state—are actively probing weaknesses in AI systems today, not tomorrow.

From Governance Gaps to Strategic Imperatives

One of the biggest lessons I’ve seen firsthand is that the governance gaps inside enterprises are often more dangerous than the technology itself.

Security vs. Data Teams: Too often, the security organization and the data organization operate in silos. Without a shared governance framework, critical questions—Who owns the data? Who sets the policies? Who enforces them?—go unanswered. This disconnect is where vulnerabilities flourish.

Super Users Without Guardrails: Generative AI has effectively turned non-technical employees into “super users.” With the right (or wrong) prompt, an employee could trigger a destructive query like DROP TABLE, leading to catastrophic data loss. Traditional access controls weren’t built for this.

Model Integrity Risks: Nearly every LLM today is vulnerable to prompt injection and manipulation. What looks like an innocent request for analysis can be hijacked to exfiltrate sensitive data or generate biased, harmful, or even malicious outputs.
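To make the “super user” risk concrete, here is a minimal sketch of a guardrail that only lets read-only SQL through when the query text was generated from a prompt. It is an illustration under stated assumptions, not how TrustLogix or any particular product works: the function names are hypothetical, the parsing is deliberately naive, and it fails closed whenever it is unsure. A least-privilege, read-only database role should remain the primary control.

```python
import re


def strip_comments(sql: str) -> str:
    """Remove block and line comments so they cannot hide a statement."""
    sql = re.sub(r"/\*.*?\*/", " ", sql, flags=re.DOTALL)
    return re.sub(r"--[^\n]*", " ", sql)


def is_read_only(generated_sql: str) -> bool:
    """Return True only if every statement in the batch starts with SELECT.

    Assumption: an allowlist of SELECT is the policy. Splitting on ';' is
    naive (a ';' inside a string literal splits the statement), but the
    resulting fragment does not start with SELECT, so the check fails closed.
    """
    statements = [s.strip() for s in strip_comments(generated_sql).split(";")]
    statements = [s for s in statements if s]
    if not statements:
        return False  # nothing to run: reject rather than guess
    return all(
        re.match(r"select\b", s, flags=re.IGNORECASE) is not None
        for s in statements
    )


if __name__ == "__main__":
    for candidate in (
        "SELECT name FROM customers",
        "SELECT 1; DROP TABLE customers",
        "/* harmless */ drop table customers",
    ):
        verdict = "allow" if is_read_only(candidate) else "block"
        print(f"{verdict}: {candidate}")
```

A check like this would sit between the model and the database, so a prompt that produces a destructive statement is stopped before execution rather than discovered afterward.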

The result? An urgent need for proactive, executive-level strategies—not just tactical fixes.

The Three Pillars of AI Security Posture Management

At TrustLogix, I’ve watched the thinking around governance evolve into what we now call AI-SPM: AI Security Posture Management. It’s a high-level discipline designed to give enterprises the same kind of control, resilience, and visibility for AI that they’ve long pursued in cloud and DevOps.

The framework rests on three pillars:

Proactive Data Protection

Automatic discovery and classification of sensitive data across AI training and inference pipelines.

Data lineage tracking to ensure auditability and reproducibility.

Granular access controls, both role-based (RBAC) and attribute-based (ABAC), tailored for AI workloads.
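As a rough illustration of how role-based and attribute-based checks can combine for an AI workload, the sketch below evaluates a request against a sensitivity ceiling per role (the RBAC part) and a purpose restriction (the ABAC part). The roles, classification labels, and the rule that training may only use lower-sensitivity data are all assumptions made for the example, not a description of any specific product’s policy model.

```python
from dataclasses import dataclass

# Hypothetical classification labels, ordered from least to most sensitive.
SENSITIVITY_ORDER = ["public", "internal", "confidential", "restricted"]

# Hypothetical RBAC rule: the most sensitive label each role may read.
ROLE_MAX_SENSITIVITY = {"analyst": "internal", "ml_engineer": "confidential"}

# Hypothetical ABAC rule: labels that may be used for model training.
TRAINING_ALLOWED = {"public", "internal"}


@dataclass(frozen=True)
class Request:
    role: str              # who is asking
    purpose: str           # why the data is needed, e.g. "model_training"
    data_sensitivity: str  # classification label on the dataset


def is_permitted(req: Request) -> bool:
    """Deny by default; allow only when both the role and purpose rules pass."""
    ceiling = ROLE_MAX_SENSITIVITY.get(req.role)
    if ceiling is None or req.data_sensitivity not in SENSITIVITY_ORDER:
        return False  # unknown role or unlabeled data
    within_role = (
        SENSITIVITY_ORDER.index(req.data_sensitivity)
        <= SENSITIVITY_ORDER.index(ceiling)
    )
    if req.purpose == "model_training":
        return within_role and req.data_sensitivity in TRAINING_ALLOWED
    return within_role


if __name__ == "__main__":
    print(is_permitted(Request("ml_engineer", "inference", "confidential")))
    print(is_permitted(Request("ml_engineer", "model_training", "confidential")))
```

The point of the second rule is that the same person can be allowed to query a dataset yet not be allowed to feed it into training, which a role check alone cannot express.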

Secure Model Lifecycle Management

Model registries with strict access controls and full audit trails.

Integrity verification using digital signatures and cryptographic checks.

Real-time monitoring to detect adversarial attacks and anomalous behavior.
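The integrity check in this pillar can be sketched with nothing more than the Python standard library. The example below records a SHA-256 digest of a model artifact and authenticates that digest with an HMAC, then verifies both before the model is loaded. The HMAC is a stand-in chosen to keep the example self-contained; a production registry would more likely use asymmetric digital signatures so that verifiers never hold the signing key. All function names are hypothetical.

```python
import hashlib
import hmac


def artifact_digest(data: bytes) -> str:
    """SHA-256 digest of the serialized model artifact."""
    return hashlib.sha256(data).hexdigest()


def sign_digest(digest: str, key: bytes) -> str:
    """Authenticate the digest with HMAC-SHA256 (stand-in for a signature)."""
    return hmac.new(key, digest.encode("utf-8"), hashlib.sha256).hexdigest()


def verify_artifact(data: bytes, expected_digest: str,
                    signature: str, key: bytes) -> bool:
    """Return True only if the artifact matches the registered digest and
    the digest itself carries a valid authentication tag."""
    digest_ok = hmac.compare_digest(artifact_digest(data), expected_digest)
    signature_ok = hmac.compare_digest(sign_digest(expected_digest, key), signature)
    return digest_ok and signature_ok


if __name__ == "__main__":
    key = b"registry-signing-key"      # assumption: managed by a secrets store
    weights = b"serialized model weights"
    digest = artifact_digest(weights)  # stored in the registry at publish time
    tag = sign_digest(digest, key)

    print(verify_artifact(weights, digest, tag, key))
    print(verify_artifact(weights + b"tampered", digest, tag, key))
```

Running the check at load time, not only at publish time, is what turns the registry’s audit trail into an enforced control.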

Continuous Posture Monitoring

Centralized visibility into who has access to what data and models.

Automated, template-driven policy enforcement.

Continuous risk detection against frameworks and benchmarks from bodies such as NIST and CIS.

This isn’t theory—it’s the pragmatic blueprint enterprises need to operationalize today. Just as cloud security posture management (CSPM) became indispensable for cloud adoption, AI-SPM is fast becoming the non-negotiable foundation for AI.

Why the Next 18 Months Matter

CxOs can’t afford to wait. Here’s why:

Regulatory Momentum: The EU AI Act, U.S. executive orders, and state-level privacy regulations are converging to place heavy accountability on AI use. Compliance won’t be optional.

Adversary Sophistication: Organized crime and nation-state actors are already targeting LLMs and AI-enabled applications. Unlike with earlier emerging technologies, there is no room for “wait and see.” The battlefield is live.

Market Expectations: Customers and investors are paying close attention. A single AI-driven data leak could undo years of trust-building and destroy competitive positioning.

The organizations that will thrive are those that move deliberately—implementing governance frameworks now, before AI adoption scales beyond their ability to control it.

A Real-World Wake-Up Call: The Salesloft Breach

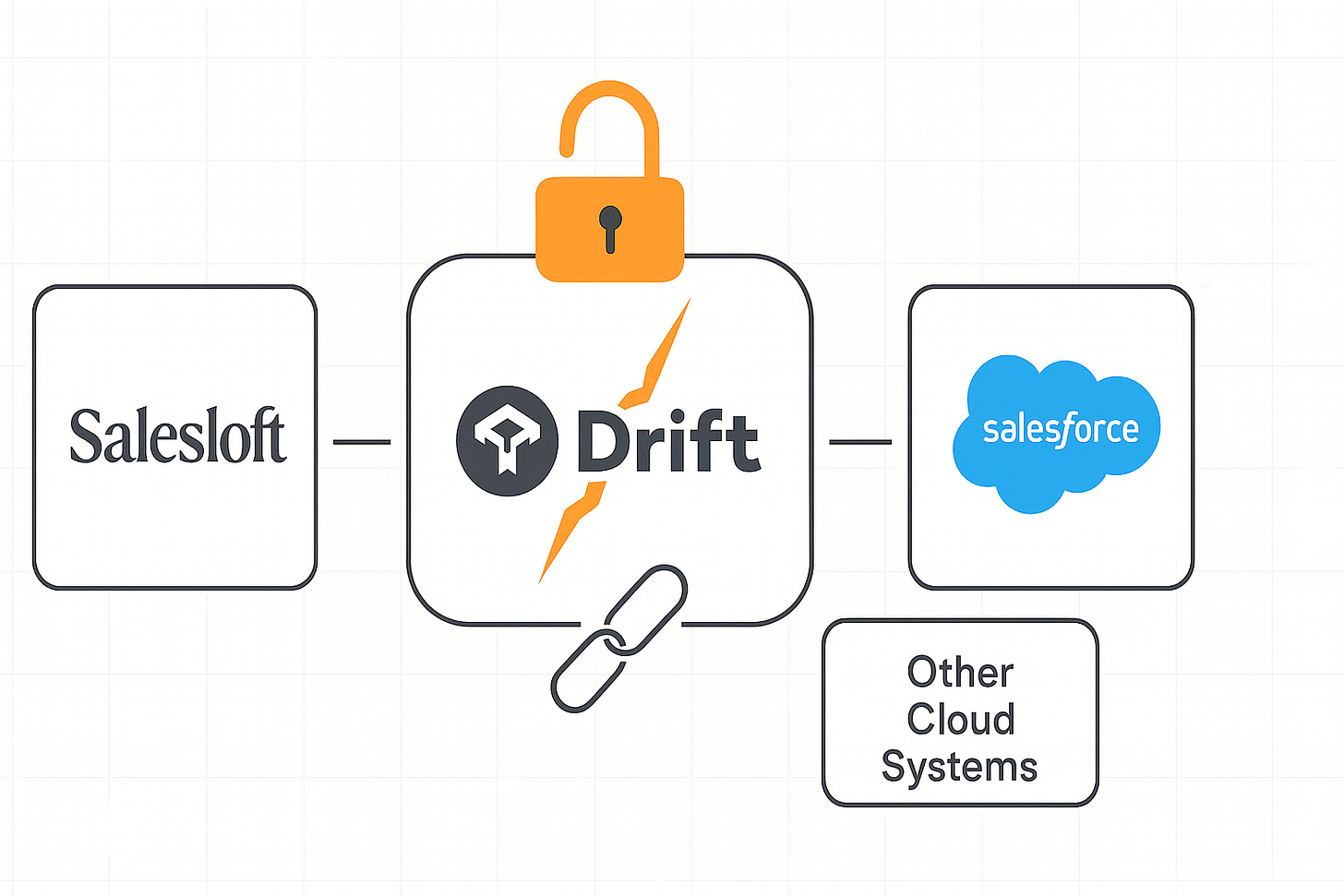

In September 2025, the industry saw just how fast trust can unravel when AI security posture isn’t managed proactively. A breach at Salesloft, whose Drift product is an AI-driven chatbot, exposed the fragility of enterprise integrations at global scale.

Attackers from the UNC6395 group stole OAuth tokens from Salesloft’s Drift platform, using them to pivot into hundreds of downstream integrations. This wasn’t just a contained incident—it spread into enterprise systems like Slack, Google Workspace, AWS, Microsoft Azure, and even OpenAI environments. Along the way, attackers harvested AWS keys, VPN credentials, and Snowflake tokens, and then deleted logs to cover their tracks (KrebsOnSecurity, ITPro).

The impact was sweeping. Security leaders at firms like Palo Alto Networks and Zscaler confirmed their organizations were affected, reminding us that even cybersecurity vendors aren’t immune (TechRadar, ITPro).

Why it matters: This was a classic case of authorization sprawl—unchecked AI-integrated tokens giving adversaries the keys to the kingdom. For executives, the lesson is crystal clear: AI governance cannot lag adoption. A single data leak or compromised token can wipe out years of trust-building and competitive advantage in a matter of days.
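One way to picture what managing authorization sprawl looks like in practice is a periodic review of integration tokens. The sketch below flags grants that carry broad scopes or have sat unused past a threshold. It is a simplified illustration: the integration names, scope names, and the 30-day idle window are hypothetical, and it does not reflect how any of the affected vendors store or audit their tokens.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone

# Hypothetical scope names treated as overly broad in this sketch.
BROAD_SCOPES = frozenset({"full", "admin"})
# Hypothetical threshold after which an unused grant should be reviewed.
MAX_IDLE = timedelta(days=30)


@dataclass(frozen=True)
class TokenGrant:
    integration: str      # name of the connected app
    scopes: frozenset     # scopes attached to the OAuth grant
    last_used: datetime   # last time the token was exercised


def flag_risky(grants, now):
    """Return (integration, reasons) pairs for grants that need review."""
    findings = []
    for grant in grants:
        reasons = []
        broad = sorted(grant.scopes & BROAD_SCOPES)
        if broad:
            reasons.append("broad scopes: " + ", ".join(broad))
        if now - grant.last_used > MAX_IDLE:
            reasons.append("idle for more than 30 days")
        if reasons:
            findings.append((grant.integration, reasons))
    return findings


if __name__ == "__main__":
    now = datetime(2025, 9, 1, tzinfo=timezone.utc)
    grants = [
        TokenGrant("chatbot", frozenset({"full"}), now - timedelta(days=2)),
        TokenGrant("crm-sync", frozenset({"read"}), now - timedelta(days=90)),
        TokenGrant("calendar", frozenset({"read"}), now - timedelta(days=1)),
    ]
    for name, reasons in flag_risky(grants, now):
        print(name, "->", "; ".join(reasons))
```

Even a basic inventory like this gives executives an answer to the question the breach raised: which third-party integrations hold tokens, and how much could each one reach if stolen.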

A Call to Action for Leaders

As I look back on my journey with TrustLogix, the throughline is clear: security and governance are not blockers to innovation; they are the enablers of sustainable, responsible AI adoption.

CxOs need to think differently. This isn’t about securing yesterday’s systems. It’s about preparing your enterprise to navigate the next wave of disruption with confidence. That requires new disciplines, new governance models, and new partnerships.

If you’re a CIO, CISO, or senior executive wrestling with these questions, I’d encourage you to take action now:

Schedule a private briefing or demo. I’m happy to arrange a session where you can see firsthand how TrustLogix is helping enterprises operationalize AI-SPM.

Connect directly. Reach out to me if you’d like to discuss your specific challenges, roadmap, or board-level concerns.

Learn more. Visit TrustLogix’s website for additional resources and insights.

Final Word

AI adoption is moving faster than most governance structures can keep up with. The temptation is to prioritize speed and deal with governance later. That’s a mistake.

What I’ve learned as an early advisor to TrustLogix is that governance isn’t the brake—it’s the steering wheel. Without it, you may move fast, but you’ll end up in a ditch. With it, you can accelerate into the future of AI confidently, knowing your enterprise is secure, compliant, and ready for what’s next.

The question isn’t whether AI-SPM will become a strategic priority for enterprises. It’s how quickly your organization will adopt it—and whether you’ll be ahead of the curve or playing catch-up.

👉 Call to Action: Contact me directly if you’d like to arrange a private briefing, demo, or meeting with a representative from TrustLogix. Or visit trustlogix.io to explore more.

References

McKinsey & Company. (2023, June). The economic potential of generative AI: The next productivity frontier. McKinsey Digital. Retrieved from https://www.mckinsey.com/capabilities/mckinsey-digital/our-insights/the-economic-potential-of-generative-ai-the-next-productivity-frontier

McKinsey Global Institute. (2023, June). Generative AI could add up to $4.4 trillion annually to the global economy. Retrieved from https://www.mckinsey.com/mgi/media-center/ai-could-increase-corporate-profits-by-4-trillion-a-year-according-to-new-research

Salesforce Research. (2023, August). Generative AI Snapshot Series: AI Ethics. Salesforce Newsroom. Retrieved from https://www.salesforce.com/news/stories/generative-ai-ethics-survey/

Salesforce Research. (2023, October). Generative AI Snapshot Series: AI Skills. Salesforce Newsroom. Retrieved from https://www.salesforce.com/news/stories/generative-ai-skills-research/

Forbes India Staff. (2023, July 14). Generative AI could add up to $4.4 trillion a year to global economy: McKinsey. Forbes India. Retrieved from https://www.forbesindia.com/article/news/generative-ai-could-add-up-to-44-trillion-a-year-to-global-economy-mckinsey/86157/1